🧠 Crossing the AI Rubicon

This is my first serious collection of thoughts on Generative AI. We're going to cover:

- What these systems are and why they matter

- Impressive examples of what they can already do

- Brief thoughts on how they work and their ethical implications

- Where they are going

- What is uniquely valuable about human intelligence / labor

- How we will collaborate with AI in the future

- What I'm doing about all this

What is Generative AI?

Generative AI combines the encoding of vast corpuses of text and images—which act as training data—and a series of machine learning algorithms to turn text prompts into incredibly creative, intelligent, and useful visual and text responses.

I would rank this among the 3 most transformative pieces of technology I've encountered in my lifetime alongside the internet and smartphones. And I was not a crypto guy (for the record). Still, I know that's a dramatic statement but I'm not the only one:

ChatGPT is one of those rare moments in technology where you see a glimmer of how everything is going to be different going forward.

— Aaron Levie (@levie) December 3, 2022

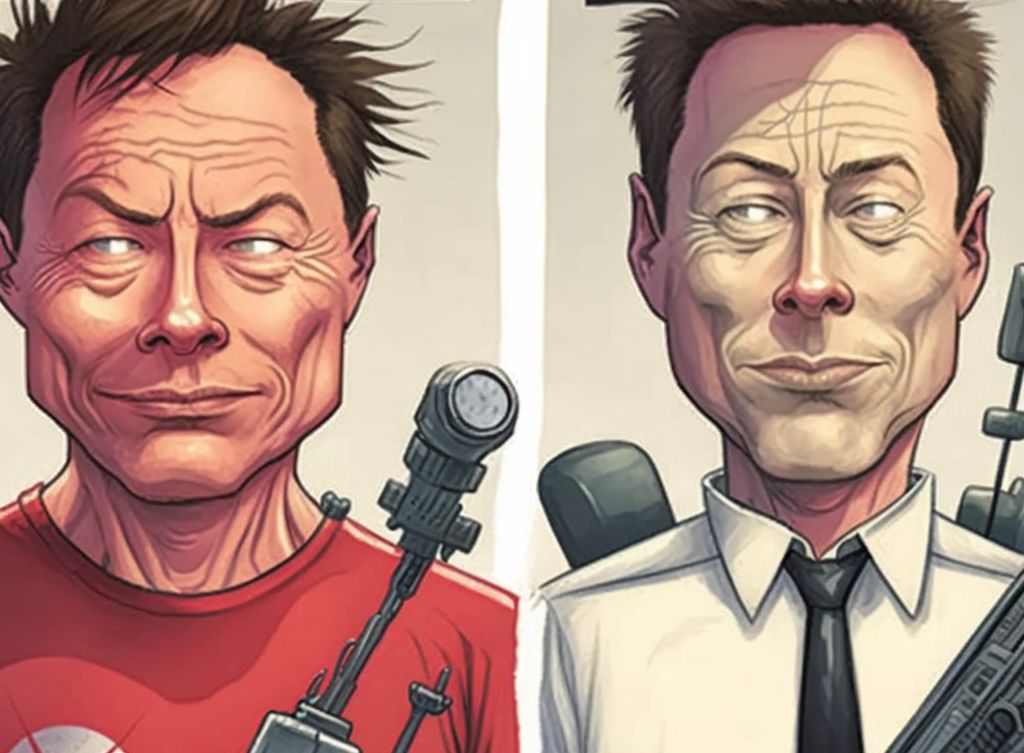

Here's an example of what these visual AI systems can generate:

the before and after pic.twitter.com/vgMh95j7p6

— Julie W. Design (@juliewdesign_) December 8, 2022

I shared a number of examples last week on ChatGPT (by the way, it's currently free to use—which won't last—so please sign up and give it a shot yourself) but here's a new one—reading contracts and answering questions about them (lawyers hate this!)

For example, they are able to ask questions about long contracts and get correct answers with citations to the relevant paragraphs (see below) pic.twitter.com/7lgJk3VGI2

— Harrison Chase (@hwchase17) December 1, 2022

And in a completely different realm: explaining topics while being obsessed with your pumpkins.

I can't compete with this pic.twitter.com/YdQ87LWIst

— Keith Wynroe (@keithwynroe) December 1, 2022

Oh, and while no one is saying that AI can serve as an actual lawyer or doctor today, ChatGPT can pass a practice bar exam and is borderline passing the United States Medical Licensing Exam (USMLE):

#OpenAI's ChatGPT is ready to become a lawyer, it passed a practice bar exam! Scoring 70% (35/50). Guessing randomly would happen < 0.00000001% of the time pic.twitter.com/pnusiuHr1p

— Kenneth Goodman (@pythonprimes) December 10, 2022
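The "guessing randomly" figure in that tweet can be sanity-checked with a quick binomial calculation. This is only a sketch: the tweet doesn't say how many answer choices each question had, so the 4-choice assumption below is mine.

```python
from math import comb


def tail_probability(n: int, k: int, p: float) -> float:
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Assumption (not stated in the tweet): every question has 4 answer choices,
# so a random guess is correct 25% of the time.
p_guess = 0.25

# Chance of getting at least 35 of 50 questions right purely by guessing.
chance = tail_probability(n=50, k=35, p=p_guess)
print(f"As a fraction: {chance:.2e}  As a percentage: {chance * 100:.2e}%")
```

Under that assumption, the result comes out below the tweet's bound of 0.00000001% (which is 1e-10 as a fraction), so the claim holds up.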

I had #OpenAI's ChatGPT take a USMLE 119 question exam. It scored 70%

I removed questions that depend on an image. Questions have 4-6 choices. https://t.co/CEZh7VMJUn pic.twitter.com/Q56pNtlzxD

— Kenneth Goodman (@pythonprimes) December 11, 2022

So yeah, this is serious. Let's discuss.

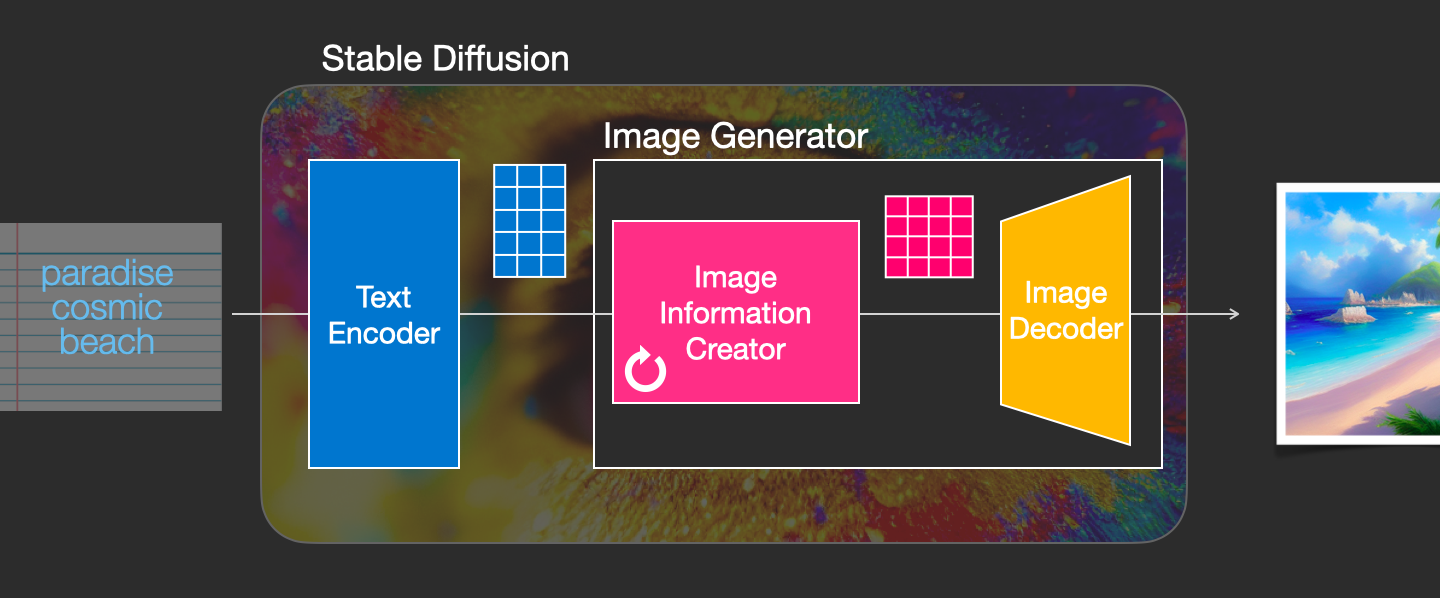

How They Work

I'm not the best person to explain how these systems work in detail but here's a good primer for visual generators like Stable Diffusion, and here's an explanation of large language models that power text generators like ChatGPT.

The big takeaway I've gotten so far is that more training data increases their intelligence / sophistication. These models are expensive to produce, and OpenAI, led by Sam Altman, has been funded by the likes of Elon Musk, Reid Hoffman, Peter Thiel, and Microsoft (which put in $1 billion in 2019).

Is it unethical?

Many people have criticized how images were used as training data without the permission of the artists. But copyright is an extremely fuzzy concept (trust me, I'd know) and the AI does not (for the most part) generate exact replicas of prior art.

If you look at how the algorithms work, it's much more like a human artist who has seen thousands of images and then uses their own wetware (aka their brain) to create work that they find appealing and interesting—and can imitate in a very high resolution way the styles of many different artists.

In 2018, Yuval Noah Harari published 21 Lessons for the 21st Century, where he wrote:

Nevertheless, in the long run no job will remain absolutely safe from automation. Even artists should be put on notice. In the modern world art is usually associated with human emotions. We tend to think that artists are channeling internal psychological forces, and that the whole purpose of art is to connect us with our emotions or to inspire in us some new feeling. Consequently, when we come to evaluate art, we tend to judge it by its emotional impact on the audience. Yet if art is defined by human emotions, what might happen once external algorithms are able to understand and manipulate human emotions better than Shakespeare, Frida Kahlo, or Beyoncé?

At the time, it might have seemed laughable. Now, it's a reality.

As it stands, right now, right this second, without extrapolating, it seems clear that it's easy to generate a cover that's better in every aspect than l, idk, 75% of self-published novels on Amazon.

— Kendric Tonn (@kendrictonn) December 3, 2022

While I'm sympathetic to many already struggling creators who will find fewer commissions and opportunities, I also think the genie is out of the lamp on this one. While there are probably some legal, technological, and social mechanisms to slow the use of these tools—I've heard of calls to boycott AI generated art—we cannot stop their widespread use. We can't even tell necessarily what's AI generated, especially if you do any post-processing to it.

One small thing I try to do out of respect to artists is never use individual artists as part of my prompts, e.g. "painting of a couple in a warm embrace in the style of Artist Name" which is often used by people looking to achieve a specific "effect".

This may be a controversial take, but I'd rather look for ways to compensate or support people of all kinds whose lives will be displaced by AI than wage a morally outraged (and frankly unwinnable) war against the usage of images.

The ubiquity of AI

Kevin Kelly wrote a book in 2016 about the 12 technological forces shaping our future. One key point was that AI would be infused into everything. I didn't really understand what that would look like at the time, but now it's coming into focus.

“The AI on the horizon looks more like Amazon Web Services – cheap, reliable, industrial-grade digital smartness running behind everything … You’ll simply plug into the grid and get AI as if it was electricity … There is almost nothing we can think of that cannot be made new, different, or more valuable by infusing it with some extra IQ. In fact, the business plans of the next 10,000 startups are easy to forecast: Take X and add AI.”

— Kevin Kelly, The Inevitable (Chapter 2: Cognifying)

For instance:

- AI filters on social media.

- AI suggestions in email, text editors, dating apps.

- AI answers to help center questions and customer service agents.

- AI recipe suggestions for the food in your fridge

What's remarkable about these technologies is that they are widely available to use. Stable Diffusion is open-source—anyone can download the code and run it on their own computer, or rent computing power on something like Google Colab for $8/mo.

OpenAI is allowing use of ChatGPT for free at the moment (at great cost) and will eventually start charging, but there are open-source alternatives like BLOOM, as well as competing LLMs developed by Google's DeepMind.

It's getting better very quickly

There's been handwringing about the role of human intelligence in the world of AI and automation for a while, but perhaps it felt like something to deal with later. Here's Bill Gates in 2014:

“Software substitution, whether it’s for drivers or waiters or nurses—it’s progressing. Technology over time will reduce demand for jobs. . . . Twenty years from now, labor demand for lots of skill sets will be substantially lower. I don’t think people have that in their mental model.”

Eight years later, this quote feels incredibly urgent given how quickly these systems are evolving and improving. Midjourney, my favorite visual generative AI, has improved dramatically from v1 to v4 in just a year-and-a-half.

Holy crap, the leap in new model v4 on @midjourney. This is thumbnails of a penguin in Venice, v3 on left, v4 on right --no specific style request. #AIart pic.twitter.com/odwjNMcRGH

— @arnicas@mstdn.social (@arnicas) November 5, 2022

So where do we fit in as humans?

Geoff Colvin's book Humans Are Underrated, written back in 2015, offers a lot of good advice here. As a senior editor at Fortune, he spoke to business leaders, military commanders, leading medical facilities, and of course technologists to understand what accelerating AI advancements would mean for the next generation. He was deeply concerned with how to advise parents and their children about preparing for the future.

His core takeaways were:

- Human desire is infinite, so there will always be some work people can do. The question is whether that work will be meaningful and high value or degrading and low value.

- What humans will likely still do best is empathize with other humans, which enables us to persuade, inspire, entertain, and engender trust.

"Empathy is the foundation of all the other abilities that increasingly make people valuable as technology advances. It means discerning what some other person is thinking and feeling, and responding in some appropriate way."

— Geoff Colvin, Humans Are Underrated

- People still want other people in positions of leadership and responsibility. Even if a business's strategic decisions are driven by an AI, we will want to hold a human CEO responsible for mistakes and to act as a check against bad AI decisions.

- Same for an AI-powered surgical procedure or an AI-powered judicial system or an AI-powered military advisor.

- Human beings will also do better in chaotic environments where what the problem is, what our goals are, and how people are thinking about things keeps changing. This quote made me think a lot about working at Meta:

"In addition, there are problems that humans rather than computers will have to solve for purely practical reasons. It isn’t because computers couldn’t eventually solve them. It’s because in real life, and especially in organizational life, we keep changing our conception of what the problem is and what our goals are."

— Geoff Colvin, Humans Are Underrated

- Human beings also collaborate a lot better when working in person and spending extended time together (this seems very relevant given the discussions about remote work and "back to office" rhetoric).

It feels to me, we’ll see a polarisation of tech companies in groups of:

1. Hybrid work. 1-3 days in the office/week

2. Office only. Like Twitter, Tesla and others that might follow

3. Full remote. Lots of smaller startups and a few larger companies (Automattic, GitLab etc)

— Gergely Orosz (@GergelyOrosz) November 10, 2022

- Colvin tells the story of the US team in the Ryder Cup, a major golf tournament where players compete as teams (the US against Europe) rather than as individual competitors. In 2008, the US chose Paul Azinger as the captain of the team, and he chose an unorthodox strategy of putting the players into pods of 4, grouping aggressive, chatty, and super-calm players together.

Then, with all assembled in Louisville on the Monday evening of tournament week, he told each pod, “You will practice together, play together, and strategize together. You are a team this week. Barring injury or illness, I will never break you apart.”

This approach was unprecedented, and so were the effects. Players virtually reversed their typical behavior. "I usually think about my own game in these team matches," said Jim Furyk, who had played on America's six previous Ryder Cup teams, "but in Louisville I was thinking about Kenny [Perry, a member of his pod], asking his caddie how Kenny was feeling, whether he wanted me to talk or keep quiet. That's usually not my style, but I tried to put myself in Kenny's shoes, playing before his people in his home state of Kentucky." The players communicated, supported, empathized. Their pods played like real teams.

— Geoff Colvin, Humans Are Underrated

- The team won, with the largest margin of any of the rare US victories in 25 years, and without Tiger Woods, who was hurt that year.

- In other words, teams of people who actually get to know each other in person can often create the strongest results. Colvin went on to cite a bunch of work showing that people collaborate worse when remote, which, again, must be concerning for leaders of distributed teams.

- Colvin also explored how doctors were being trained to provide a more empathetic experience. Assuming medical diagnosis will soon be AI-powered (see this TikTok for one doctor's reaction), the role of the human physician will be focused on providing care, answering questions in a way the patient will understand, and counseling them on next steps.

- The training emphasized things like responding to patients' emotional cues immediately rather than coming back to them later, because otherwise patients just escalate or shut down. It also discouraged saying things like "I understand," since that can feel patronizing and is false.

"A striking bit of data from the Cleveland Clinic’s experience is that while 70 percent of program participants found the empathetic relationship training useful, only 10 percent expected it to be. Only 10 percent!"

— Geoff Colvin, Humans Are Underrated

So in other words: listening to others, understanding their inner emotional and mental landscape, expressing authentic and appropriate emotions, and using verbal and nonverbal communication to build stronger relationships are critical skills that AI will have a harder time doing well.

Teaming up with AI

Ok, so beyond getting better at connecting with other human beings (extroverts unite!) what else is there? Learning to partner with AI.

Kai-Fu Lee, a longtime AI researcher, former CEO of Google China, and technology investor, developed a 2x2 matrix for how humans should expect to interact with AI in his 2018 book AI Superpowers: China, Silicon Valley, and the New World Order.

He defines two spectra: structured vs unstructured environments and asocial vs social environments.

- Human labor will be in the "Safe Zone" in the social, unstructured environments where it will be hard to train an AI to train pets, offer physical therapy, and style someone's hair and face.

- Meanwhile, structured, asocial work is most easily replaced, such as the work of fast food cooks, garment factory workers, and cashiers. He calls this the "Danger Zone."

- The third category he calls "Human Veneer": social but structured environments, like many hospitality jobs, where people want to interact with other people but the labor may be mostly automated.

- The last category is unstructured but asocial work, like driving a cab, plumbing, and auto repair. It will be harder to train the AI to navigate all situations, but the jobs in this "Slow Creep" area will eventually be replaced.

This provides four ways humans will work with AI, three of which involve collaborating with it. We will ultimately need to see AI as a partner in our efforts.
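The four quadrants above can be sketched as a small lookup. This is my own simplification, not anything from Lee's book: he describes two spectra, while the sketch below flattens each one into a yes/no flag, and the job tags are just my reading of the examples listed above.

```python
from enum import Enum


class Zone(Enum):
    SAFE = "Safe Zone"
    HUMAN_VENEER = "Human Veneer"
    SLOW_CREEP = "Slow Creep"
    DANGER = "Danger Zone"


def classify(social: bool, structured: bool) -> Zone:
    """Map a job's two traits onto one of the four quadrants."""
    if social:
        return Zone.HUMAN_VENEER if structured else Zone.SAFE
    return Zone.DANGER if structured else Zone.SLOW_CREEP


# Hypothetical tagging of jobs mentioned in the text: (social, structured).
EXAMPLES = {
    "hair stylist": (True, False),
    "hotel receptionist": (True, True),
    "plumber": (False, False),
    "cashier": (False, True),
}

for job, (social, structured) in EXAMPLES.items():
    print(f"{job}: {classify(social, structured).value}")
```

Real jobs sit somewhere along each spectrum rather than at the corners, so treat the output as a rough first guess rather than a verdict.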

Instead of starting from scratch, we may want to see what the AI thinks, select or remix an option, and collaborate towards a solution. This is not dissimilar to how many executives operate today: receiving proposals and ideas from their staff and providing feedback before making a final choice. Recall Colvin's point about how no one wants to take orders from an AI or hold an algorithm responsible for a legal judgment.

In a heartwarming conclusion to what is a fairly sobering book about how China is quickly accelerating past the US in terms of its technological innovation and the growth of its tech companies, Kai-Fu Lee shares a revelation he had after recovering from cancer about the importance of relationships.

“I had used my fame in China to educate and inspire young people. I had done nothing to deserve dying at the age of fifty-three. Every one of those thoughts began with “I” and centered on self-righteous assertions of my own “objective” value. It wasn’t until I wrote down the names of my wife and daughters, character by character in black ink, that I snapped out of this egocentric wallowing and self-pity. The real tragedy wasn’t that I might not live much longer. It was that I had lived so long without generously sharing love with those so close to me.”

This was part of a turning point in his perspective on technology and AI, and it led to the following realization:

“If AI ever allows us to truly understand ourselves, it will not be because these algorithms captured the mechanical essence of the human mind. It will be because they liberated us to forget about optimizations and to instead focus on what truly makes us human: loving and being loved.”

— Kai-Fu Lee, AI Superpowers

Now, it can be hard to take Lee at his word given how much he still focuses on technological development, expanding his VC firm, writing books, etc. But I am willing to accept that he believes loving human relationships are important and that this is a message he wants to share with the world.

What I'm doing about all this

First off, I realize I can continue refining my humanity and my empathy skills. I hope to take more listening, relationship, and communication courses in 2023 to sharpen this critical skillset.

Second, I'm trying to create content that is more human and expressive. This is why I've been leaning into video—it's much easier to show emotion and create a deeper bond with your audience.

For instance, you can see how tired I am at 16:34 in this video.

Third, I'm trying to learn more about how these Generative AI systems work more deeply and uncover their creative potential. You've seen my explorations with Midjourney through the cover images of many of the last few newsletters, including this one.

Meanwhile, OpenAI has an API, and now Zapier lets you more easily interact with it without needing to write code (link). I used it to build a fun story synopsis generator. I love random generators and imagining interesting stories, so I loved how quickly I was able to put this together. Feel free to duplicate the Google Sheet (link) and the Zap here.
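If you'd rather see the idea in code, here is a rough sketch of the random-prompt half of a generator like this. It is not the actual Sheet or Zap: the word lists and the `build_prompt` helper are made up for illustration, and the step that sends the prompt to a text-completion API is left out entirely.

```python
import random

# Hypothetical option lists; the real Google Sheet may use different ones.
CHARACTERS = ["genius child", "grizzled therapist", "retired pilot", "nervous botanist"]
GOALS = ["discover the secrets within a newly found planet", "win back a stolen ship"]
OBSTACLES = ["go against their community", "trust a former rival"]
GENRES = ["space opera", "cozy mystery", "western"]


def build_prompt(rng: random.Random) -> str:
    """Pick two distinct characters plus a goal, obstacle, and genre at random."""
    first, second = rng.sample(CHARACTERS, 2)
    goal = rng.choice(GOALS)
    obstacle = rng.choice(OBSTACLES)
    genre = rng.choice(GENRES)
    return (
        f"Write a short story synopsis about a {first} and a {second} "
        f"who want to {goal} but they'll have to {obstacle}, "
        f"written for the {genre} genre."
    )


# Seeding the generator makes a given prompt reproducible.
print(build_prompt(random.Random(2022)))
```

The resulting string is what you would hand to the language model; everything after that (the API call, writing the result back to a sheet) is what Zapier handles in the no-code version.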

(I recently made my Twitter private so you'll have to request to follow @jasonshen to see the following tweets)

Here's an example of a precious genius child and a grizzled therapist who want to discover the secrets within a newly found planet but they'll have to go against their community, written for the space opera genre.

Loved the twist! pic.twitter.com/vv64TFquHV

— Jason Shen 🚢✏️ (@JasonShen) December 10, 2022

The story follows a talented genius child, and a grizzled therapist, as they team up to discover the secrets of a newly found planet. Despite coming from different backgrounds, they unite to search for the hidden truths of the strange new planet. However, their mission is put to the test when their community is not supportive of their mission.

With their backs against the wall, the two protagonists use the latest technology to go beyond the boundaries of their community and traverse the unknown planet. As they make their way through the planet, they find a strange monument containing a powerful artifact, revealing the secrets of the planet.

Unexpectedly, the artifact reveals that the planet is actually a prison world, created by another powerful species to contain a dark force. The dark force was the source of the planet's secrets, yet the two protagonists must now find a way to free the planet from its clutches.

In the end, the two protagonists are able to drive away the darkness and save the planet. They then use the powerful artifact to create a shield around the planet, ensuring that the dark force is never able to return. With their mission successful, the two protagonists are hailed as heroes by their community and their stories are remembered for generations to come.

I encourage you to give these products a try and start understanding their power, potential, and limitations.

- Try Midjourney for free (requires a free Discord account)

- Try DALL-E for free

- Try Stable Diffusion for free

- Try ChatGPT for free

- Try Lex for free

Do you have questions or thoughts on Generative AI? Reply back—I'd love to talk more with you about this.

More Resources and Fun Stuff

- Book Notes: Summaries / quotes from great books I've read

- Scotch & Bean: a webcomic about work, friendship, and wellness

- Birthday Lessons: Ideas, questions, and principles I've picked up over the years

- Career Spotlight: A deep dive into my journey as an athlete, PM, founder, and creator.